
ComfyDeploy: How does ComfyUI Griptape Nodes work in ComfyUI?

What is ComfyUI Griptape Nodes?

This repo creates a series of nodes that enable you to use the [Griptape Python Framework](https://github.com/griptape-ai/griptape/) with ComfyUI, integrating AI into your workflow.

How to install it in ComfyDeploy?

Head over to the machine page:

- Click on the "Create a new machine" button

- Select Edit build steps

- Add a new step -> Custom Node

- Search for ComfyUI Griptape Nodes and select it

- Close the build step dialog and then click on the "Save" button to rebuild the machine

ComfyUI Griptape Nodes

This repo creates a series of nodes that enable you to utilize the Griptape Python Framework with ComfyUI, integrating LLMs (Large Language Models) and AI into your workflow.

Instructions and tutorials

Watch the trailer and all the instructional videos on our YouTube Playlist.

The repo currently has a subset of Griptape nodes, with more to come soon. Current nodes can:

- Create Agents that can chat using these models:
  - Local - via Ollama and LM Studio
    - Llama 3
    - Mistral
    - etc.
  - Via Paid API Keys
    - OpenAI
    - Azure OpenAI
    - Amazon Bedrock
    - Cohere
    - Google Gemini
    - Anthropic Claude
    - Hugging Face (Note: Not all models featured on the Hugging Face Hub are supported by this driver. Models that are not supported by Hugging Face serverless inference will not work with this driver. Due to the limitations of Hugging Face serverless inference, only models that are smaller than 10GB are supported.)

- Control agent behavior and personality with access to Rules and Rulesets.

- Give Agents access to Tools.

- Run specific Agent Tasks.

- Generate Images using these models:
  - OpenAI
  - Amazon Bedrock Stable Diffusion
  - Amazon Bedrock Titan
  - Leonardo.AI

- Audio
  - Transcribe Audio
  - Text to Voice

Ultimate Configuration

Use nodes to control every aspect of the Agent's behavior with the following drivers:

- Prompt Driver

- Image Generation Driver

- Embedding Driver

- Vector Store Driver

- Text to Speech Driver

- Audio Transcription Driver

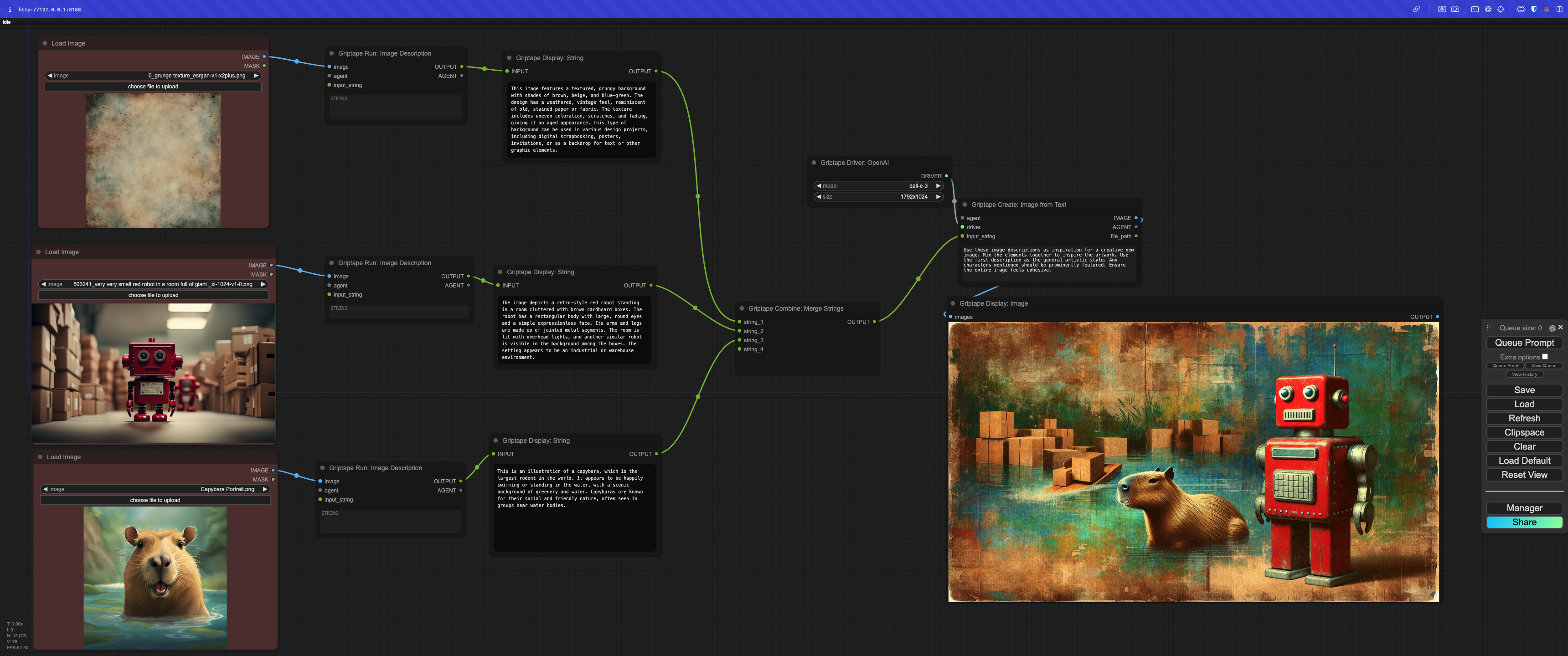

Example

In this example, we're using three Image Description nodes to describe the given images. Those descriptions are then Merged into a single string which is used as inspiration for creating a new image using the Create Image from Text node, driven by an OpenAI Driver.
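The merge step in this example can be pictured in plain Python. This is only a minimal sketch: `merge_texts` is a hypothetical helper, not the node's actual implementation, and the descriptions are placeholder strings rather than real model output.

```python
def merge_texts(texts: list[str], separator: str = "\n\n") -> str:
    """Join non-empty text fragments with a separator (hypothetical helper).

    Blank or whitespace-only inputs are skipped so an empty input
    does not leave a stray separator in the result.
    """
    cleaned = [t.strip() for t in texts if t and t.strip()]
    return separator.join(cleaned)


# Placeholder strings standing in for three Image Description outputs.
descriptions = [
    "A foggy harbor at dawn with fishing boats.",
    "A neon-lit street market at night.",
    "",  # an empty input is ignored
    "A field of sunflowers under a storm sky.",
]

# The merged string becomes the inspiration text for a new image prompt.
prompt = "Create an image inspired by:\n" + merge_texts(descriptions, separator="\n")
print(prompt)
```

Keeping the separator as a parameter mirrors the idea of merging text with a custom separator; the prompt wording itself is just an illustration.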

The following image is a workflow you can drag into your ComfyUI Workspace, demonstrating all the options for configuring an Agent.

More examples

You can preview and download more examples here.

Using the nodes - Video Tutorials

- Installation: https://youtu.be/L4-HnKH4BSI?si=Q7IqP-KnWug7JJ5s

- Griptape Agents: https://youtu.be/wpQCciNel_A?si=WF_EogiZRGy0cQIm

- Controlling which LLM your Agents use: https://youtu.be/JlPuyH5Ot5I?si=KMPjwN3wn4L4rUyg

- Griptape Tools - Featuring Task Memory and Off-Prompt: https://youtu.be/TvEbv0vTZ5Q

- Griptape Rulesets, and Image Creation: https://youtu.be/DK16ouQ_vSs

- Image Generation with multiple drivers: https://youtu.be/Y4vxJmAZcho

- Image Description, Parallel Image Description: https://youtu.be/OgYKIluSWWs?si=JUNxhvGohYM0YQaK

- Audio Transcription: https://youtu.be/P4GVmm122B0?si=24b9c4v1LWk_n80T

- Using Ollama as a Configuration Driver: https://youtu.be/jIq_TL5xmX0?si=ilfomN6Ka1G4hIEp

- Combining Rulesets: https://youtu.be/3LDdiLwexp8?si=Oeb6ApEUTqIz6J6O

- Integrating Text: https://youtu.be/_TVr2zZORnA?si=c6tN4pCEE3Qp0sBI

- New Nodes & Quality of life improvements: https://youtu.be/M2YBxCfyPVo?si=pj3AFAhl2Tjpd_hw

- Merge Input Data: https://youtu.be/wQ9lKaGWmZo?si=FgboU5iUg82pXRkC

- Setting default agent configurations: https://youtu.be/IkioCcldEms?si=4uUu-y9UvIJWVBdE

- Merge Text with dynamic inputs and custom separator: https://youtu.be/1fHAzKVPG4M?si=6JHe1NA2_a_nl9rG

- Multiple Image Descriptions and Local Multi-Modal Models: https://youtu.be/KHz7CMyOk68?si=oQXud6NOtNHrXLez

- WebSearch Node Now Allows for Driver Functionality in Griptape Nodes: https://youtu.be/4_dkfdVUnRI?si=DA4JvegV0mdHXPDP

- Persistent Display Text: https://youtu.be/9229bN0EKlc?si=Or2eu3Nuh7lxgfEU

- Convert an Agent to a Tool.. and give it to another Agent: https://youtu.be/CcRot5tVAU8?si=lA0v5kDH51nYWwgG

- Text-To-Speech Nodes: https://youtu.be/PP1uPkRmvoo?si=QSaWNCRsRaIERrZ4

- Update to Managing Your API Keys in ComfyUI Griptape Nodes: https://www.youtube.com/watch?v=v80A7rtIjko

Recent Changelog

Please view recent changes here.

Installation

1. ComfyUI

Install ComfyUI using the instructions for your particular operating system.

2. Use Ollama

If you'd like to run with a local LLM, you can use Ollama and install a model like llama3.

- Download and install Ollama from their website: https://ollama.com

- Download a model by running ollama run <model>. For example: ollama run llama3

- You now have Ollama available to you. To use it, follow the instructions in this YouTube video: https://youtu.be/jIq_TL5xmX0?si=0i-myC6tAqG8qbxR
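Before wiring Ollama into a workflow, it can help to confirm the server is running and the model was pulled. The sketch below assumes Ollama's default address (http://localhost:11434) and its `GET /api/tags` endpoint, which lists locally installed models; `parse_model_names` and `list_local_models` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address; change it if yours differs


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in payload.get("models", []) if "name" in m]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> list[str]:
    """Return installed model names, or an empty list if Ollama is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return parse_model_names(json.load(resp))
    except (OSError, ValueError):
        # OSError covers URLError, refused connections and timeouts;
        # ValueError covers a response body that is not valid JSON.
        return []


if __name__ == "__main__":
    models = list_local_models()
    if models:
        print("Ollama is running with:", ", ".join(models))
    else:
        print("Ollama is not reachable, or no models have been pulled yet.")
```

If llama3 was pulled as above, it would typically appear with a tag such as `llama3:latest`.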

3. Install Griptape-ComfyUI

There are two methods for installing the Griptape-ComfyUI repository: download or git clone this repository into the ComfyUI/custom_nodes directory, or use the ComfyUI Manager.

Option A - ComfyUI Manager (Recommended)

- Install ComfyUI Manager by following the installation instructions.

- Click Manager in ComfyUI to bring up the ComfyUI Manager.

- Search for "Griptape".

- Find the ComfyUI-Griptape repo.

- Click INSTALL.

- Follow the rest of the instructions.

Option B - Git Clone

Open a terminal and input the following commands:

cd /path/to/comfyUI
cd custom_nodes
git clone https://github.com/griptape-ai/ComfyUI-Griptape

4. Make sure libraries are loaded

Libraries should be installed automatically, but if you're having trouble, hopefully this can help.

There are certain libraries required for Griptape nodes that are called out in the requirements.txt file.

griptape[all]

python-dotenv

These should get installed automatically if you used the ComfyUI Manager installation method. However, if you're running into issues, please install them yourself either using pip or poetry, depending on your installation method.

Option A - pip

pip install "griptape[all]" python-dotenv

Option B - poetry

poetry add "griptape[all]" python-dotenv
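To see which of these libraries are actually present, something like the following can be run with the same Python interpreter ComfyUI uses. It is a minimal sketch built on `importlib.metadata`: it checks distribution names only, so it cannot tell whether the `[all]` extras were installed, and `missing_packages` is a hypothetical helper name.

```python
from importlib import metadata

# Distribution names from the requirements listed above.
REQUIRED = ["griptape", "python-dotenv"]


def missing_packages(names: list[str]) -> list[str]:
    """Return the distributions from `names` that are not installed."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        for name in REQUIRED:
            print(f"{name} {metadata.version(name)}")
```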

5. Restart ComfyUI

Now if you restart ComfyUI, you should see the Griptape menu when you right-click.

If you don't see the menu, please come to our Discord and let us know what kind of errors you're getting - we would like to resolve them as soon as possible!

6. Set API Keys

For advanced features, it's recommended to use a more powerful model. These are available from the providers listed below and require API keys.

- To set an API key, click on the Settings button in the ComfyUI sidebar.

- Select the Griptape option.

- Scroll down to the API key you'd like to set and enter it.

Note: If you already have a particular API key set in your environment, it will automatically show up here.

You can get the appropriate API keys from these respective sites:

- OPENAI_API_KEY: https://platform.openai.com/api-keys

- GOOGLE_API_KEY: https://makersuite.google.com/app/apikey

- AWS_ACCESS_KEY_ID & AWS_SECRET_ACCESS_KEY:

- Open the AWS Console

- Click on your username near the top right and select Security Credentials

- Click on Users in the sidebar

- Click on your username

- Click on the Security Credentials tab

- Click Create Access Key

- Click Show User Security Credentials

- LEONARDO_API_KEY: https://docs.leonardo.ai/docs/create-your-api-key

- ANTHROPIC_API_KEY: https://console.anthropic.com/settings/keys

- VOYAGE_API_KEY: https://dash.voyageai.com/

- HUGGINGFACE_HUB_ACCESS_TOKEN: https://huggingface.co/settings/tokens

- AZURE_OPENAI_ENDPOINT & AZURE_OPENAI_API_KEY: https://learn.microsoft.com/en-us/azure/ai-services/openai/how-to/switching-endpoints

- COHERE_API_KEY: https://dashboard.cohere.com/api-keys

- ELEVEN_LABS_API_KEY: https://elevenlabs.io/app/

- Click on your username in the lower left

- Choose Profile + API Key

- Generate and copy the API key

- GRIPTAPE_CLOUD_API_KEY: https://cloud.griptape.ai/configuration/api-keys
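Because keys already set in your environment show up in the Griptape settings automatically, a quick environment check can save some guesswork. This is a sketch under assumptions: it only inspects environment variables (plus an optional .env file loaded through python-dotenv), not keys typed into the Settings panel, it uses the standard AWS name AWS_SECRET_ACCESS_KEY, and `key_status` is a hypothetical helper name.

```python
import os
from collections.abc import Mapping

# Variable names from the list above; trim it to the providers you actually use.
KEY_NAMES = [
    "OPENAI_API_KEY",
    "GOOGLE_API_KEY",
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "LEONARDO_API_KEY",
    "ANTHROPIC_API_KEY",
    "VOYAGE_API_KEY",
    "HUGGINGFACE_HUB_ACCESS_TOKEN",
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
    "COHERE_API_KEY",
    "ELEVEN_LABS_API_KEY",
    "GRIPTAPE_CLOUD_API_KEY",
]


def key_status(env: Mapping[str, str], names: list[str]) -> dict[str, bool]:
    """Map each variable name to whether it has a non-blank value in `env`."""
    return {name: bool(env.get(name, "").strip()) for name in names}


if __name__ == "__main__":
    try:
        from dotenv import load_dotenv  # provided by python-dotenv

        load_dotenv()  # loads a .env file if one is found; existing variables are kept
    except ImportError:
        pass
    for name, is_set in key_status(os.environ, KEY_NAMES).items():
        # Report only whether a key is present, never its value.
        print(f"{name:32} {'set' if is_set else 'not set'}")
```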

Troubleshooting

Torch issues

Griptape installs torch as a requirement. Sometimes this can cause problems with ComfyUI, where the wrong version of torch gets picked up, especially on Nvidia GPUs. As per the ComfyUI docs, you may need to uninstall and reinstall torch.

pip uninstall torch

pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu121
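To check which torch build ComfyUI is actually using, the version string's local tag (the part after `+`) is a useful hint. A `+cpu` suffix indicates a CPU-only wheel, while a missing tag is inconclusive, so this sketch also asks `torch.cuda.is_available()`. The helper `describe_torch_build` is a hypothetical name, and the script should be run with ComfyUI's own Python interpreter.

```python
def describe_torch_build(version: str) -> str:
    """Classify a torch version string by its local build tag (after '+')."""
    _, _, local = version.partition("+")
    if local == "cpu":
        return "CPU-only build"
    if local.startswith("cu"):
        return f"CUDA build ({local})"
    if local.startswith("rocm"):
        return f"ROCm build ({local})"
    return "no build tag (check torch.cuda.is_available())"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("torch is not installed in this Python environment")
    else:
        print(torch.__version__, "->", describe_torch_build(torch.__version__))
        print("CUDA available:", torch.cuda.is_available())
```

After reinstalling from the cu121 index shown above, the version would be expected to carry a `+cu121` tag.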

Griptape Not Updating

Sometimes the Griptape library doesn't get updated properly. This seems to happen especially when using the ComfyUI Manager. You might see an error like:

ImportError: cannot import name 'OllamaPromptDriver' from 'griptape.drivers' (C:\Users\evkou\Documents\Sci_Arc\Sci_Arc_Studio\ComfyUi\ComfyUI_windows_portable\python_embeded\Lib\site-packages\griptape\drivers\__init__.py)

To resolve this, you must make sure Griptape is running with the appropriate version. Things to try:

- Update again via the ComfyUI Manager

- Uninstall & Re-install the Griptape nodes via the ComfyUI Manager

- In the terminal, go to your ComfyUI directory and type:

python -m pip install griptape -U

- Reach out on Discord and ask for help.
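An ImportError like the one above suggests the griptape package ComfyUI loads is not the version the nodes expect. A hedged way to confirm is to run the following with the same Python interpreter ComfyUI uses (for the Windows portable build, the one in python_embeded). It only reports the installed griptape version and whether `griptape.drivers` exposes `OllamaPromptDriver`; `has_attribute` is a hypothetical helper name.

```python
import importlib
from importlib import metadata


def has_attribute(module_name: str, attr: str) -> bool:
    """True if `module_name` imports cleanly and exposes `attr`."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:  # also covers ModuleNotFoundError
        return False
    return hasattr(module, attr)


if __name__ == "__main__":
    try:
        print("griptape version:", metadata.version("griptape"))
    except metadata.PackageNotFoundError:
        print("griptape is not installed in this Python environment")
    ok = has_attribute("griptape.drivers", "OllamaPromptDriver")
    print("OllamaPromptDriver importable:", ok)
```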

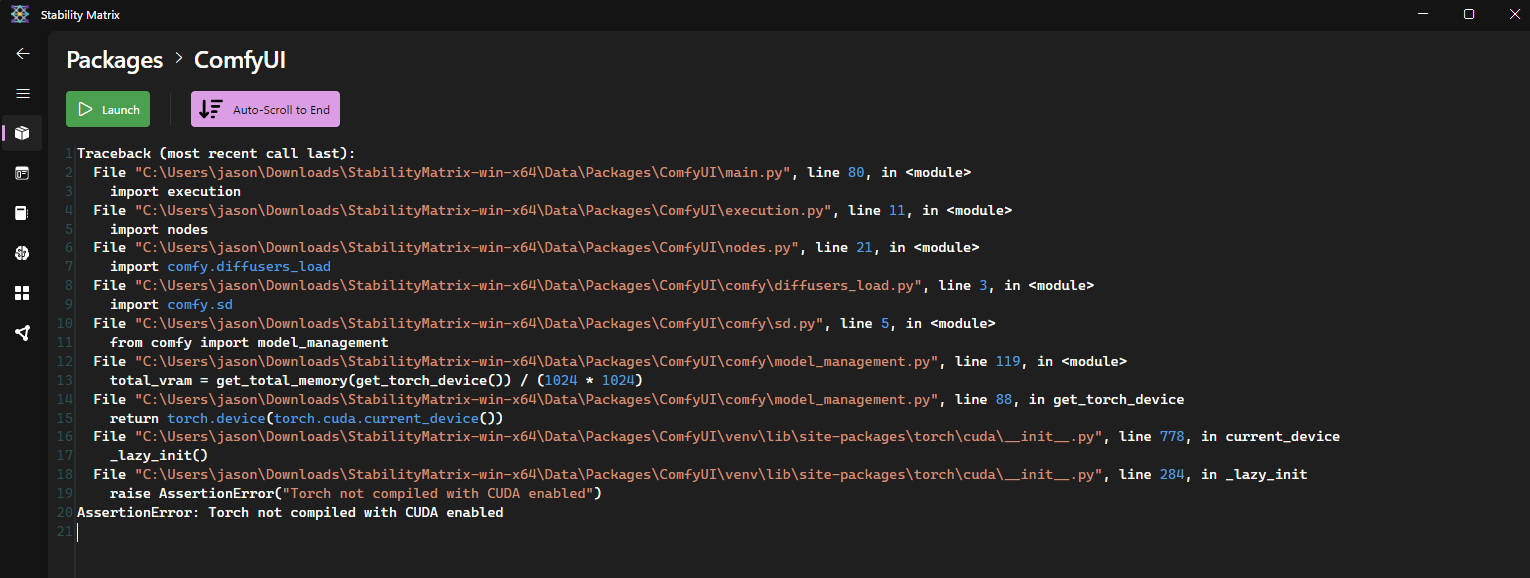

StabilityMatrix

If you are using StabilityMatrix to run ComfyUI, you may find that after you install Griptape you get an error like the following:

To resolve this, you'll need to update your torch installation. Follow these steps:

- Click on Packages to go back to your list of installed Packages.

- In the ComfyUI card, click the vertical ... menu.

- Choose Python Packages to bring up your list of Python Packages.

- In the list of Python Packages, search for torch to filter the list.

- Select torch and click the - button to uninstall torch.

- When prompted, click Uninstall.

- Click the + button to install a new package.

- Enter the list of packages: torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu121

- Click OK.

- Wait for the install to complete.

- Click Close.

- Launch ComfyUI again.

Thank you

Massive thank you for help and inspiration from the following people and repos!

- Jovieux from https://github.com/Amorano/Jovimetrix

- rgthree https://github.com/rgthree/rgthree-comfy

- IF_AI_tools https://github.com/if-ai/ComfyUI-IF_AI_tools/tree/main